OpenAI has unveiled two new open-weight reasoning models, gpt-oss-120b and gpt-oss-20b. They are the company's first open-weight language models since GPT-2, which debuted more than five years ago. Both are available for free on Hugging Face and are aimed at developers and researchers who want to build their own solutions on top of open models.

The models differ in power and hardware requirements:

- gpt-oss-120b: the larger and more capable model, which can run on a single 80 GB NVIDIA GPU;

- gpt-oss-20b: a lighter version that can run on an ordinary laptop with 16 GB of memory.
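Whether a model of this size fits in 16 GB depends mostly on how many bits each weight occupies. The back-of-envelope sketch below assumes roughly 20 billion parameters (inferred from the name "gpt-oss-20b", not stated in the text) and counts weight storage only, ignoring activations, the KV cache and the operating system. The precisions compared are illustrative, not a claim about how gpt-oss is actually stored.

```python
# Back-of-envelope estimate of the memory needed just to hold model weights.
# Assumption: ~20 billion parameters, inferred from the name "gpt-oss-20b".

def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Weights-only footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 20e9   # hypothetical round figure, not an official parameter count
BUDGET_GB = 16  # laptop memory quoted in the article

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    gb = weight_memory_gb(PARAMS, bits)
    verdict = "fits within" if gb < BUDGET_GB else "exceeds"
    print(f"{label}: ~{gb:.0f} GB of weights, {verdict} {BUDGET_GB} GB")
```

Under these assumptions only a roughly 4-bit representation leaves headroom in 16 GB, which suggests that reduced-precision weights are what make a laptop deployment of a model this size feasible.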

OpenAI's aim is to offer an American open-model alternative to Chinese laboratories such as DeepSeek, Alibaba's Qwen team and Moonshot AI, which are actively developing powerful open models of their own and whose influence is growing.

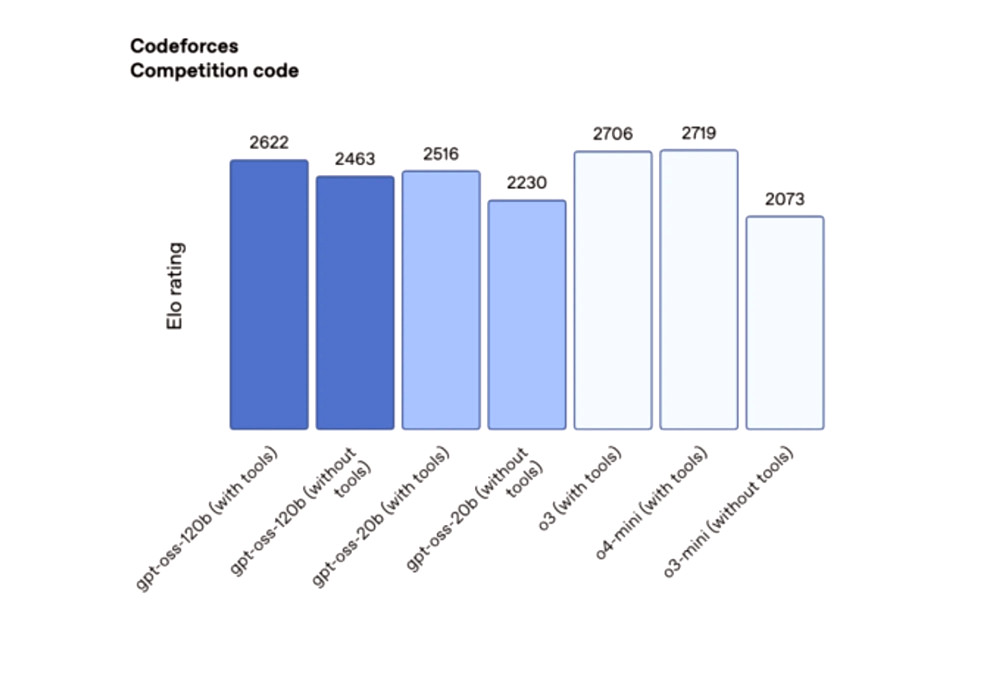

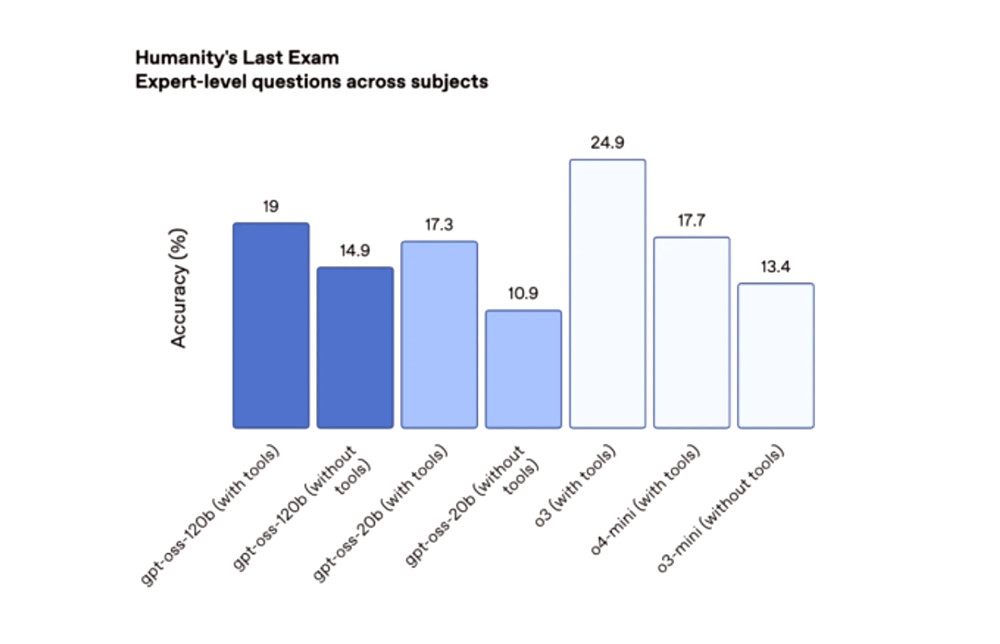

On the competitive programming platform Codeforces, gpt-oss-120b reached an Elo rating of 2622 and gpt-oss-20b a rating of 2516, surpassing DeepSeek R1 but falling short of OpenAI's closed models o3 and o4-mini. On the challenging Humanity’s Last Exam (HLE), gpt-oss-120b scored 19% and gpt-oss-20b 17.3%, outperforming other open models but still trailing o3.

The new models were trained with methods similar to those used for OpenAI's closed models. They use a mixture-of-experts (MoE) architecture, which activates only a subset of the parameters for each token and so reduces the computation needed per token. Additional reinforcement learning (RL) fine-tuning taught the models to build chains of reasoning and to call tools such as web search and Python code execution.
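The "only a portion of parameters per token" idea can be illustrated with a toy routing layer. The sketch below is a generic top-k mixture-of-experts layer, not gpt-oss's actual implementation: the hidden size, the number of experts (16) and the number of experts activated per token (2) are arbitrary illustrative values, and each "expert" is a single random linear map rather than a trained feed-forward network.

```python
import math
import random

# Toy top-k mixture-of-experts (MoE) layer in pure Python.
# Illustrative only: sizes are arbitrary and NOT gpt-oss's real configuration.
DIM = 8           # hidden size of a token vector (hypothetical)
NUM_EXPERTS = 16  # experts in the layer (hypothetical)
TOP_K = 2         # experts activated per token (hypothetical)


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def random_matrix(rows, cols, rng, scale):
    """Random Gaussian matrix standing in for trained weights."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def moe_forward(x, router, experts, k):
    """Route token x to its top-k experts and mix their outputs by gate weight."""
    logits = matvec(router, x)  # one routing score per expert
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalize over chosen experts
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = matvec(experts[i], x)  # only the selected experts do any work
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top


rng = random.Random(0)
router = random_matrix(NUM_EXPERTS, DIM, rng, 1.0)
experts = [random_matrix(DIM, DIM, rng, 0.1) for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0.0, 1.0) for _ in range(DIM)]

out, used = moe_forward(token, router, experts, TOP_K)
print("experts used for this token:", sorted(used))
print(f"share of expert weights touched: {TOP_K / NUM_EXPERTS:.1%}")
```

For each token, only `TOP_K` of the `NUM_EXPERTS` expert matrices are multiplied; the router that picks them is cheap by comparison, which is where the efficiency gain of an MoE layer comes from.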

The models work with text only and do not generate images or audio. They are released under the Apache 2.0 license, which permits commercial use without approval from OpenAI, although the training data has not been released because of copyright risks.

With gpt-oss, OpenAI aims to strengthen its position in the developer community while responding to political pressure in the US to boost the role of American open models in global competition.